There is a fascinating open peer review happening on the post-publication peer review platform PubPeer. In six days, over 70 comments have been left on the thread of our submitted article (already available as a preprint on the arXiv and submitted to PloS One).

The discussion started with an optimistic and supportive comment by Peer 1 stating that our paper, Stirling et al, “should finally lay to rest the whole striped nanoparticles controversy”. That comment also included the suggestion that the article should have been submitted to Nature Materials rather than PloS One, which prompted an interesting discussion on the choice of journal; excerpts: Peer 2: “PLOS is open access and not for profit. I do not see that PLOSone is a “worse” journal than Nature Materials or one of the “glam” journals.” Julian: “openness should be at the heart of science, especially when things become disputed”. Philip then quoted a correspondence with an Editor who indicated that his/her journal does not publish “papers that correct, correlate, reinterpret, or in any way use existing published literature data.” That prompted further discussions as well as an excellent post entitled “Science is interpretation” at blogs.discovermagazine.com by Neuroskeptic.

The discussion then took a different turn:

Stripey nanoparticles get some defenders? https://t.co/riWKAy2MeA cc @Neuro_Skeptic @raphavisses

— PubPeer (@PubPeer) January 5, 2014

I won’t attempt to summarize the scientific arguments here. The discussion continues as of this morning. Philip and Julian went to extreme lengths to address all the long, convoluted (and repetitive) points made by this defender, including preparing a slide show which I have already shared here in the previous post.

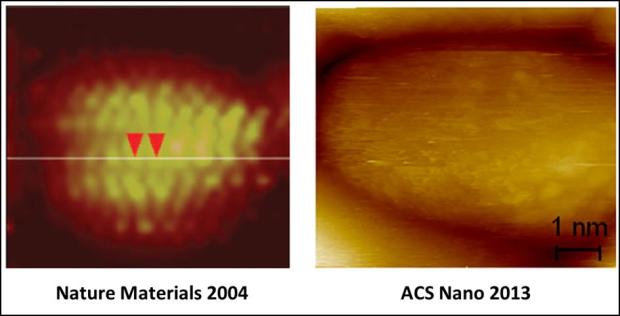

The most recent discussions have centered on the contrast between the clearly visible stripes in the early papers and their absence in the most recent articles, e.g. this (from another Unregistered user): “The proof that the 2004 experiment are artifacts is that Stellacci himself can not reproduce the STM images with clear stripes. […] Things are pretty clear to me. All these papers are just overselling bad experiments into a fancy story with nice cartoons. Period.” and this from Peer 7 “In their recent papers (ACS Nano and Langmuir 2013) when proper imaging conditions are used with their particles, the stripes are not visible at all and the authors must use complicated (and in my opinion wrong) image treatment procedures to extract a vague correlation length and do not admit that they were wrong in the first place.”

Even the “stripey defender” admits that artifacts are present in the seminal 2004 paper, but we are unlikely to see a retraction of this article. I have elaborated on that point at PubPeer and reproduce here my comment in full:

Philip above suggests that there is a “tacit admission” in the 2013 stripy papers that the 2004 results can’t be reproduced.

There is no admission in the 2013 Stellacci papers, not even a ‘tacit’ one, that the 2004 stripes may be an artifact, nor even that they can’t be reproduced, this even though:

* that was already demonstrated (but not published) by Predrag Djuranovic, Stellacci’s student at MIT, in 2005: https://raphazlab.wordpress.com/2012/12/11/seven-years-of-imaging-artifacts/

* we had already published, at the end of 2012, strong arguments showing that the stripes were an artifact (and Francesco had already read our paper in 2009): https://raphazlab.wordpress.com/2012/11/23/stripy-nanoparticles-revisited/

* as noted by us and multiple peers above, the STM images in the 2013 papers clearly show that the 2004 stripes cannot be reproduced,

BUT, critically, the text of the 2013 articles explicitly says exactly the contrary, i.e. it says that the data prove the existence of the stripes observed in 2004, e.g.:

Biscarini et al: “The analysis of STM images has shown that mixed-ligand NPs exhibit a spatially correlated architecture with a periodicity of 1 nm that is independent of the imaging conditions and can be reproduced in four different laboratories using three different STM microscopes.”

“We have used the method to compare data across different laboratories and validate the data acquired earlier.29, 35”

ref 29 is the 2004 Nature Materials article while ref 35 is its first follow-up, Jackson et al 2006 (JACS)

Ong et al:

“The shape of these domains has a striking resemblance to the results of simulations published in ref 28.”

“In the case of NP2 the spacing that we observe is the same spacing reported for these types of particles in the past in ref 19. We believe that we image molecular features and not features related to the gold core, as high-resolution transmission electron microscopy images of these particles do not show any evidence of protrusions with these spacings (see Figure S12)”

ref 28 is Singh et al (simulations)

ref 19 is Hu et al 2009, which contains multiple instances of data re-use in the figures and uses the data from 2004 for its *rigorous* statistical analysis (the latter only became apparent when the data were released last year).

https://raphazlab.wordpress.com/2013/05/22/data-re-use-update/

https://raphazlab.wordpress.com/2013/05/17/some-stripy-nanoparticle-raw-data-released-today/

https://raphazlab.wordpress.com/2013/05/28/browsing-the-archive/

The glaring contradiction between the what-the-data-show and what-the-authors-say-the-data-show is one among many of the extraordinary aspects of the saga. What is even more difficult to understand is that these articles passed peer review in 2013 when many of the arguments were already in the public domain.

The 2004 paper should have been retracted in 2005. Unregistered 4:42am says “If the 2004 images cannot be reproduced, that makes it clear for just about anyone to see that all of the follow on work should be reviewed by very critical eyes.” That is one reason why we are very unlikely to see an admission that the 2004 paper is based on an artifact, let alone a retraction. Immediate and voluntary admission of an error would probably not have carried much of a penalty* but ten years and 30 articles later, the stakes are possibly higher. *http://www.slate.com/articles/health_and_science/science/2012/10/scientists_make_mistakes_how_astronomers_and_biologists_correct_the_record.html

I truly and genuinely do not know what will happen now in this field. Will Stirling et al -with the help of the discussion happening here and elsewhere- be more efficient at correcting the scientific record than Cesbron et al 2012, or, will we see more stripy articles in 2014 than in 2013? The experiment about science continues… Theories and predictions welcome although unfortunately there will be no possibility of reproducing the experiment.

The thread at PubPeer is an enlightening conversation. I am not a nanoparticle expert, but I have been aware of this controversy since it erupted in the blogosphere, and have more or less followed the arguments since then. I was pretty much convinced that Levy, Moriarty and co-authors had sensible points against the evidence for striped nanoparticles.

However, having read the long thread of comments, it seems to me that the technical points brought up by the defender of Stellacci are serious, and that the authors are not able to refute them convincingly. I am not able to understand all of the nitty-gritty details discussed here, but I find it ludicrous that Moriarty had to apologize for his snide tone and that Stirling finds the conversation “silly” and “tedious”, and accuses the Stellacci defender of “trolling this discussion”, when he (Stirling) does not, or cannot, address the scientific criticisms.

Given that the authors are challenging a body of peer-reviewed work and accusing Stellacci of not being straightforward, they have the moral duty to at least be open to criticisms, but also patient and respectful with those who challenge their work with reasoned arguments. However, they are not behaving in this way, which is probably the reason why Stellacci and his collaborators are not engaging with them.

A controversy should gravitate towards resolution with the help of careful and respectful debate instead of the turning of deaf ears or the downright dismissal of criticisms.


https://en.wikipedia.org/wiki/Sockpuppet_%28Internet%29


https://en.wikipedia.org/wiki/Lie#Butler_lie


Unfortunately, as I see it, we are being debated with a Gish Gallop (http://www.urbandictionary.com/define.php?term=Gish%20Gallop). The “technical points” brought up by Stellacci’s defender were each answered multiple times and have been re-asked in various guises, completely ignoring our arguments, to give the appearance of intellectual debate. Yet the arguments that are presented are the sort that I wouldn’t even expect from an undergraduate. It is for this reason I have decided to wait for someone (with a peer number, i.e. an academic) to say they find a point of his convincing. You say that, as a non-expert, you find his arguments convincing? Which ones?

I really don’t like the appearance that we are backing out from a debate. But early this week we spent a disproportionate amount of time, reiterating points already in the paper, and answering a plethora of strange arguments from an anonymous person on an internet forum. Please remember we have put all our data and analysis code online, and have come out ready to debate putting our own name to the discussion. However, how long can one argue with a faceless character on the internet when they seem to wilfully ignore your points? I would happily have this argument in public with Stellacci or one of his co-workers.

I have bowed out from PubPeer for now because I have lots of other work to do. In terms of my research I think of all the time I spend on the stripy paper as “wasted” time, as I fail to see how it got through peer review. We only did the work because we felt that the record needed correcting; we were not doing new science but undoing bad science. So please, before you accuse me or Phil (Moriarty) of not engaging, please remember that none of Stellacci’s co-workers have been prepared to come forward and have an open debate.


Julian, if you find the points from the Stellacci defender so easy to refute (you say they “are the sort that I wouldn’t even expect from an undergraduate”), why don’t you prove the defender wrong? As far as I can tell, and I am an established scientist, the defender has been able to refute your points quite convincingly.

But you haven’t convincingly addressed the comments of the defender. For example, the defender argued that all your analysis of artefacts is based on just one image out of many published. Similarly, that your analysis of observer bias is also based on one image. And that the spatial features can be discerned from images published across the years by comparing the appropriate features in the PSDs and the width of the stripes.

Your complaint about the anonymity of the defender is not justified. Haven’t you or your colleagues applauded PubPeer as a great peer-review platform? Peer review wouldn’t work well without reviewer anonymity. You should treat the defender as you would treat any peer reviewer at any journal. Not only is this the fair way of having a discussion, but otherwise you are undermining the essence of the PubPeer platform.

You fail to see how the Stellacci work on striped nanoparticles got through peer review. Instead, the way I see it is that, with all those 30+ papers having gone through peer review while yours hasn’t yet, the burden of proof falls on you. And you mistreat a peer reviewer at PubPeer that happens to challenge your work. That doesn’t speak well of you, and I bet it explains why Stellacci and co-workers don’t engage with you or send you samples while they have sent samples to other labs. What are the chances that all those tens of peer reviewers are wrong and that you, Philip and Raphael are right? I guess most people would put their money with the peer reviewed work.


You ask why don’t I prove them wrong? Did you read the point on the Gish Gallop? Each time “the defender” comes to PubPeer (s)he just repeats arguments from before, and makes a list of claims on things we have already answered in the paper. I can’t see how this takes much time. Yet for me to go, find the section of the paper and the raw data, and dissect the criticism takes me a very long time. I have wasted hours explaining things from the paper and even explaining basic scientific practice only to be ignored. It becomes like debating creationists, a huge time sink with no progress. Other peers seem to have come to this conclusion too.

You bring up the one image thing? First, this is the seminal image from which the whole stripy saga got started. I will stop bringing it up when they retract the paper based on it. As for other images we go into detail on the JACS data showing that the ringing in the current image can be identified as strong peaks in the PSD (Figure S2 and S3) which is then used to show that the eye-balling of the “real” stripes in that paper was flawed (Fig 4) as they are within frequency range of the strong oscillations (ringing) in the current image. So their first image was just a feedback artefact, and their later (JACS) data showing they could identify feedback ringing and ignore the ringing was incorrect, the ringing dominates as shown by our analysis. So I ask Stellacci to find me (if he has any) an image with these vivid stripes, with no evidence of ringing and send me the raw data.

Next the PSDs. We have pointed out time and time again that their PSDs are fitted using a method which is very easy to bias by changing the initial conditions of the fit. And also that to get their agreement they had to exclude data for a main part of the model, still fit the whole model, then use a different parameter than normal from the fit to define the spacing. None of this was mentioned in the paper! This PSD method is simply unreliable. Furthermore, as we have shown in Fig 10 it doesn’t even separate stripes from random speckling.
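To make the point about initial conditions concrete, here is a toy sketch. It is not the fit used in any of the papers discussed here and it uses no real STM data: it simply fits the frequency of a synthetic sinusoid with a greedy local search, and shows that the answer depends on the starting guess. All names and numbers (`TRUE_FREQ`, `local_fit`, the two starting guesses) are assumptions made up for illustration.

```python
import math

# Synthetic data: a pure sinusoid with a known frequency (arbitrary units).
TRUE_FREQ = 1.0
N_POINTS = 200
LENGTH = 10.0
XS = [i * LENGTH / N_POINTS for i in range(N_POINTS)]
YS = [math.sin(2 * math.pi * TRUE_FREQ * x) for x in XS]


def cost(freq):
    """Sum of squared residuals between the data and a sinusoid of frequency freq."""
    return sum((y - math.sin(2 * math.pi * freq * x)) ** 2 for x, y in zip(XS, YS))


def local_fit(freq_guess, step=0.01, min_step=1e-6):
    """Greedy downhill search: step while the cost drops, otherwise halve the step."""
    freq, best = freq_guess, cost(freq_guess)
    while step > min_step:
        moved = False
        for candidate in (freq + step, freq - step):
            c = cost(candidate)
            if c < best:
                freq, best, moved = candidate, c, True
                break
        if not moved:
            step /= 2
    return freq


good = local_fit(1.02)  # starting guess close to the true frequency
bad = local_fit(1.30)   # starting guess further away: gets stuck in a local minimum

print("fit from 1.02:", good, "cost:", cost(good))
print("fit from 1.30:", bad, "cost:", cost(bad))
```

Both runs "converge", but only the first one recovers the frequency that was put in. A fit that is reported without its starting values, and without a check that other starting values give the same answer, is hard to trust for exactly this reason.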

The points on anonymity really annoy me. I have never sung the praises of PubPeer; others I work with have, and you can take this up with them. The point people bring up is that anonymity is essential because otherwise young scientists would not feel comfortable disagreeing with papers from prominent groups. I stand here before you a young scientist, with my name and my picture, challenging the work of a very prominent scientist. If I have revealed myself and put my name to my critical work, despite the infancy of my career, why can’t you, as an “established scientist”, reveal your identity?

You say I should treat the reviewer as I would any reviewer at a journal? A journal has an editor who independently selects an expert in a field. PubPeer allows any scientist with a first or corresponding authorship to get a peer number; they could be an expert in a very different field or even someone in Stellacci’s group, something which should not be possible via a journal. But this defender does not even have a peer number! Thus, they could be anyone, scientist or not; they may even be you (I am not accusing you, I am just pointing out that such is internet anonymity that we have no way to show that sock puppetry is not happening)!

So here I will reiterate. I think anonymity even as done on PubPeer is bad for the scientific debate. Journal peer review is different because it is not a public debate. Though I would argue here that journal peer review should be anonymous both ways so that the paper is judged on its content, rather than on how famous its authors are!


Stirling,

Thank you for providing further information. Could you please post this on PubPeer so as not to break down the conversation into pieces at different sites?

I agree with controversy watcher: I am not receiving the best treatment. This behavior speaks at length about you and Moriarty, in particular about how ‘open’ you are to criticisms.

I’ll now respond to your points above:

* The Gish Gallop accusation is unfair. If I am repeating points it is because you and Moriarty have not answered them, and they are not answered in the paper. And I have added more criticisms by refuting the arguments in many of your replies. We have not progressed much because you are not able to address most of the points. It thus becomes more and more clear that there are inherent flaws in your arguments. Creationists debate on the basis of arguments that can’t be proved. My arguments are objective, detailed, and can be falsified. You are being unfair and are sweeping most of the criticisms under the rug.

* You have only analyzed one image among many of the 2004 work. The 2004 paper is not based on one image, but on many. You can’t claim that ALL of the 2004 STM work (let alone all of the work on striped nanoparticles) is flawed.

* Referring to the 2006 work published in JACS, you wrote “the ringing in the current image can be identified as strong peaks in the PSD (Figure S2 and S3) which is then used to show that the eye-balling of the “real” stripes in that paper was flawed (Fig 4) as they are within frequency range of the strong oscillations (ringing) in the current image.” I haven’t yet commented on Figure 4, so what I am going to say next is a new criticism:

In your Figure 4 you plot the spatial frequency range for the noise from the tunnel current images corresponding to Figure 3 of the 2006 work (JACS). But you do not say in your paper that the images that you analyze contain both nanoparticles and flat regions (the gold foil substrate), and that the substrate largely dominates (in percentage of image area). So when you analyze the noise, you are mostly analyzing the noise corresponding to the substrate. Stellacci mentions in the paper that when imaging the substrate, the features are tip-speed dependent, which means that the ripples arise from noise, but when imaging the area where the nanoparticles sit, the features are NOT tip-speed dependent, which rules out noise artifacts. So Stellacci shows that stripe features arising from noise can be distinguished from those that are genuine by looking at how the spacing of the stripes changes with tip speed (the spacing doesn’t change for the ripples on the nanoparticles). But because the substrate in the images you analyze dominates, in your Figure 4 you are showing the frequency range of the noise corresponding to the substrate (in fact, the grey area and the red dots correlate and decrease in value with tip speed; this is not the case for the green diamonds and blue triangles, which are largely tip speed independent). Then you assume that this noise would be the same for the nanoparticles. This is wrong. The grey area in your Figure 4 is not the relevant noise for the green diamonds and blue triangles. You should extract the power spectra and relevant spatial frequencies for the nanoparticle regions only (not for the whole image!) to obtain the noise range for the nanoparticles and compare it with the spatial frequency for the nanoparticles (the green diamonds and blue triangles). To sum up this point, you are comparing apples (the noise range for the spatial features of the substrate) with pears (the spatial features of the nanoparticles).

* Continuing with the previous point, there is another misrepresentation in your paper. You wrote “Jackson et al. 2006 [24] also state that the gold foil substrates used in the work have curvature comparable to that of the nanoparticle core. This begs the question as to just how some areas were objectively defined as the surface, and thus exhibited feedback noise, while others were defined as nanoparticles with molecular resolution”. But this sentence quoted from the JACS paper refers to Figure 1, not to Figure 3, which is the one you analyzed. Also, you ‘forgot’ to mention that the curved gold foil substrates do not show any features (just look at the circled area in Figure 1a of the JACS paper), which is not what happens to Figure 3a, where there are clear ripples on the substrates. So you again misrepresent facts to prove your points.

* I have already rebutted your point on the PSDs. In your Fig S5 you haven’t fitted the magenta and red curves properly within the shoulder region. So no, the method is not very easy to bias by changing the initial conditions if you set a low tolerance for the error of the fit. You have set a high tolerance, so of course you obtain a large variability in the parameters. Your Figure S5 shows bad usage of statistics.

* You deserve all the respect for putting your name behind your comments, especially because you are a young scientist. That’s exemplary. But given your behavior and that of Moriarty and Levy on this blog and on PubPeer, you have to understand why others would prefer to remain anonymous. Moreover, this is peer review, so anonymity is the norm. Deal with it.
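The tip-speed argument in the Figure 4 point above rests on simple arithmetic, sketched here under stated assumptions: an oscillation at a fixed temporal frequency (such as feedback ringing) is written into the image with a spatial period equal to tip speed divided by frequency, whereas a real surface feature keeps the same spacing at any speed. The function names, the 1 kHz ringing frequency and the tip speeds below are all hypothetical values chosen for illustration, not numbers from any of the papers.

```python
def ringing_spacing_nm(tip_speed_nm_per_s, ringing_hz):
    """Apparent spatial period of a fixed-frequency oscillation at a given tip speed."""
    return tip_speed_nm_per_s / ringing_hz


def relative_spread(values):
    """(max - min) / mean: close to 0 means the spacing does not change with speed."""
    mean = sum(values) / len(values)
    return (max(values) - min(values)) / mean


# Hypothetical tip speeds (nm/s) and a hypothetical 1 kHz ringing frequency.
speeds = [500.0, 1000.0, 2000.0]
ringing = [ringing_spacing_nm(v, 1000.0) for v in speeds]

# A hypothetical genuine 1 nm feature looks the same at every speed.
genuine = [1.0 for _ in speeds]

print("ringing spacings (nm):", ringing, "spread:", relative_spread(ringing))
print("genuine spacings (nm):", genuine, "spread:", relative_spread(genuine))
```

The test only discriminates if the spacings are measured on the same region at clearly different speeds, which is why the question of which image areas enter the analysis matters to both sides of this argument.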

In conclusion, in your paper you hand picked figures, used arguments inconsistently, performed unfair comparisons, misrepresented claims from published papers, performed lousy statistics, and used all these to make bold and sweeping claims.


controversy watcher,

I really haven’t dug very far into some of Julian and Phillip’s arguments, to be honest. All I need to know is stated many times in the pubpeer thread:

1. There is a categorical difference in image quality between the 2004 and 2006 papers and the 2013 paper (refs 23, 24 vs. ref 38); this is not explained by Stellacci and co. There are clear stripes in the 2004 and 2006 papers, there are no clear stripes in the 2013 paper. There is a very credible argument that the STM was not performed correctly in (at least) the 2004 and 2006 papers. There is a consensus that the STM in the 2013 paper was done correctly. It is very hard to believe that the difference in image quality is due to the choice of ligand used in the 2013 paper (and easy to address, as shown in the next point).

2. Stellacci has not shared a (what I imagine would be easy to make) batch of the nanoparticles used in refs 23, 24 so that they could be imaged by the expert groups that contributed to the 2013 paper or by Moriarty. This makes no sense: synthesizing the particles would take far less time than all the man hours that have been consumed in this debate. High quality images of the particles used in the 2004 and 2006 papers would essentially settle the debate here. No one seems to predict (even Stellacci’s proponent in the pubpeer thread) that one could expect the images to be consistent.

3. There is a credible report by Predrag Djuranovic (*who was a graduate student of Stellacci’s*) which says that he reported the “stripes” as imaging artifacts long ago in 2005.

In my mind this evidence is clearly sufficient to call for a critical look at the reproducibility and validity of (at least) the 2004 and 2006 papers. This could be easily addressed by Stellacci sharing a material sample. The fact that a material sample hasn’t been shared appropriately (after months of waiting) is troubling.


For anyone new to the story, the categorical difference in images is illustrated on this blog:


Nanonymous, I’ll answer your points to the best of my ability:

1. The image quality in the 2013 papers is far better. But what you see there are the fingerprints of individual ligands, not coarse-grained contours, as the 2013 images come from high-resolution microscopy. If you look at Fig. 7b in Julian’s paper, you’ll see clear stripes. The comparison at https://raphazlab.files.wordpress.com/2013/11/moriarty-comparison.jpg shows contours on the left and ligands on the right. A fairer comparison to the 2004 image would be the images in Fig. 1 of Biscarini et al.

2. As far as I can see, Stellacci did share the samples with Renner and De Feyter. You can find the comparison of images taken at the different labs in Fig. 1 of Biscarini et al.

3. I am not sure how much credibility Predrag Djuranovic has… did he graduate? Has he published any papers on nanoparticle imaging? I can’t find them.

As I see it, Stellacci has sent samples to other labs, just not those of Moriarty or Levy, which I would say is not that surprising given the way they have behaved on PubPeer and on this blog.


The 2004 images are feedback ringing artifacts. The 2013 images don’t show visible stripes.

Not sharing the particles with his critics is scientifically dishonest. You don’t just share with people that are friendly to you.

I don’t think Drago’s background is very impressive, but so what. His results (see the post) are very clear. You can generate stripy images on bare gold foil! (And Raph and Moriarty have impressive STM backgrounds.)


controversy watcher,

1. I don’t understand what you are saying here. Are you saying that a high resolution image of a whole particle somehow doesn’t capture the contour that you would see in a low resolution image? The contrast appears large in the 2004/2006 papers; getting the same image from high resolution images should just be a matter of subsampling and (perhaps) anti-aliasing. I’ve never heard of a case where an accurate high resolution image of something doesn’t contain at least as much information as a low resolution image of the same thing. What is a “fingerprint” of a ligand?

2. I haven’t seen a “good” image by, say, Renner side by side with a “bad” image by Stellacci of the same particle type (though perhaps I am getting confused with the different ligand types somewhere, I don’t have access to all of the papers). Phillip addresses this in: https://raphazlab.wordpress.com/2013/11/14/the-emperors-new-stripes/#comments

There simply aren’t stripes in the high quality image, Stellacci’s position seems to be that you can’t do a comparison because the ligands are different (see post). This doesn’t seem like a credible idea. I quote Phillip’s response here, to support the idea that we don’t see stripes when high quality images are taken and we have not yet seen high quality images of the particles used in the 2004 and 2006 papers:

“The onus is on *you* to use the same particles and to do the direct comparison. There’s a very simple way to prove me wrong – send the same type of nanoparticles used for the Nature Materials 2004 paper to Christoph and have his group show that it’s possible to get images where the contrast is the same as in the Nature Materials paper while the tunnel current is being tracked reliably . *Then, and only then, will I modify the post at Raphael’s blog.* Do this and not only will I retract that blog post but I’ll donate £1000 to a charity of your choice.“

3. I don’t know Predrag, but I’ve seen the narrative of a student challenging the pet hypothesis of an advisor before; his credibility certainly isn’t lower in my eyes because of this (given what I know from his testimony on this blog). Given what Julian and Phillip have demonstrated so far, it seems clear to me that Predrag was correct in challenging the notion of stripes. I can’t help but admire someone who (presumably) called out what he saw was wrong.
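The subsampling remark in point 1 above can be illustrated with a minimal sketch, assuming a made-up pixel grid rather than any real STM data: averaging each k-by-k block of a high resolution image (a crude anti-aliased downsample) gives a low resolution image, and features wider than a block survive the averaging. The function name `block_average` and the toy image are hypothetical.

```python
def block_average(image, k):
    """Downsample a 2D list of numbers by averaging each k-by-k block of pixels."""
    rows, cols = len(image), len(image[0])
    if rows % k or cols % k:
        raise ValueError("image dimensions must be multiples of k")
    out = []
    for r in range(0, rows, k):
        out_row = []
        for c in range(0, cols, k):
            block = [image[r + i][c + j] for i in range(k) for j in range(k)]
            out_row.append(sum(block) / (k * k))
        out.append(out_row)
    return out


# Toy "high resolution" image: vertical stripes, two pixels wide.
high_res = [
    [0, 0, 1, 1, 0, 0, 1, 1],
    [0, 0, 1, 1, 0, 0, 1, 1],
    [0, 0, 1, 1, 0, 0, 1, 1],
    [0, 0, 1, 1, 0, 0, 1, 1],
]

low_res = block_average(high_res, 2)
for row in low_res:
    print(row)
```

In this toy case the stripes are still there after downsampling. This says nothing by itself about what real tunnelling images contain; it only shows the direction of the argument, namely that a coarse image can be derived from a fine one but not the reverse.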


@controversy watcher (Jan 11 2014, 11:14 AM)

You are perhaps not aware of the detailed history associated with this debate and controversy. I am hoping that if I make you aware of some aspects of the ‘back-story’ that the level of frustration in some of my comments over at PubPeer might be, if not justified, at least explained to some degree. Note, however, that the “Unregistered Submission” who has attempted to justify Stellacci et al.’s striped nanoparticle work has hardly been the model of restraint and diplomacy in their comments.

Nonetheless, I have apologised to “Unregistered” for my somewhat overwrought tone. Here are some of the reasons for the palpable irritation in some of my early comments on the PubPeer site:

– All of the ‘criticisms’ raised by “Unregistered” had already been dealt with in some detail in our paper. There was a willful misreading/misrepresentation of our work in many of Unregistered’s comments.

– Despite being asked (by commentators at PubPeer other than Julian and myself) to give at least some hints of their scientific background (without revealing their identity, of course), “Unregistered” did not do this. They then went on to demonstrate a remarkable lack of understanding of basic experimental protocols in their comments. I stress that this is not just my opinion – you can see for yourself that a number of PubPeer commenters have been gobsmacked at Unregistered’s lack of understanding of, for example, signal averaging.

– The suggestion that we should “do more experiments” to show that Stellacci et al’s data and analyses are fundamentally flawed is, to put it mildly, very unfair. I discuss this point in a blog post uploaded a few minutes ago: https://raphazlab.wordpress.com/2014/01/12/whither-stripes/

and in our detailed response to all of Unregistered’s points at PubPeer: https://pubpeer.com/publications/B02C5ED24DB280ABD0FCC59B872D04/comments/5593

– We have had to expend a vast amount of time and effort trying to get even a small subset of the raw data from Francesco Stellacci for analysis, including contacting journal editors. This is not how science should work and I find the implications of the entire stripy saga for science and the peer review process to be rather depressing. This may well also account for the rather aggrieved ‘undercurrent’ of my initial contributions to the debate at PubPeer.

I agree entirely and this is why you’ll see that after I apologised to “Unregistered” at PubPeer, I have ignored any attempts to get a rise out of me (e.g. “Elementary, my dear Moriarty”). I hope that you will find our detailed rebuttal of all of Unregistered’s arguments at the following link to be convincing.

https://pubpeer.com/publications/B02C5ED24DB280ABD0FCC59B872D04/comments/5593

My very best wishes,

Philip


1. FS won’t share the nanoparticles with his critics.

2. Modern, high quality pictures don’t show visible stripes and look nothing like the original ones.

3. The original pictures were taken with very poor imaging techniques, well known to create artifacts.

It’s Shroud of Turin silliness. You stick to your guns Phil and Julian. It’s crazy that 30 papers have been built on this edifice.


thanks Nony… but what happens next? What do you suggest?


Keep hammering at it. These people have put out 30 papers and continue to do so. Go synthesize the particles and image them. Just keep after it. Really, what you are doing is important as an object lesson for all of science.

And if the synthesis is tricky or the like, so what. Just do your best and if they claim you did not follow the right (unwritten) steps, then it just shows they are not sharing methods properly, and it also calls out their not sharing particles.

Please…don’t stop.

There are mistakes in crystallography or particle science or even organic chemistry. But not this level of 30 papers and Science/Nature silliness like in “nanoscience”. Really, it’s exactly what I was seeing in the mid 90s.

Keep after it and just enjoy yourselves doing work on the synthesized particles. (Heck, it’s another paper. ;-))


I finally read and understood the three panel image from Drago. For some reason, I thought it was too technical, before, but it’s really very simple and damning.

1. Figure a shows computational image with feedback ringing on clean conducting sphere (RIPPLED STRIPES SEEN).

2. Figure b is an experimental STM of a clean bumpy gold surface (RIPPLED STRIPES SEEN).

3. Figure c is the FS Nature 2004 image (RIPPLED STRIPES SEEN).

All these “stripes” look very similar, thick, rippled lines.

Then in the 2013 high quality STM images (no feedback, cold, vacuum) from FS’s specially selected collaborators: NO VISIBLE STRIPES. They look like clean little eggs. No hair or anything on them.

FS has built a house of cards…


@Unregistered Submission (Jan 13, 1:28 AM)

1. As regards addressing your points, please see https://raphazlab.wordpress.com/2014/01/12/whither-stripes/ and

https://pubpeer.com/publications/B02C5ED24DB280ABD0FCC59B872D04/comments/5593

2.“* You have only analyzed one image among many of the 2004 work”

As both Julian and I have stated repeatedly, that particular image is the *seminal* image from which the misinterpretation of feedback loop ringing as stripes originated. It calls into question all of the other images in the Nature Materials paper, and the follow-up papers where even Stellacci admits ringing was a key contribution to the image.

It took a huge amount of effort to secure the tunnel current map for that particular image from Francesco Stellacci. This is not how science should work. The obligation is on Stellacci to show that the rest of the images are not tainted by feedback loop ringing.

3. As regards the “distinction” of “real stripes” from feedback loop ringing in Jackson et al 2006 and Hu et al. 2009, which we have dealt with multiple times now, it might be instructive to remind you of the type of image quality that was used to extract stripe ‘spacings’ for the ‘quantitative’/’statistical’ analysis, as Stellacci et al described it, in those papers. See https://raphazlab.files.wordpress.com/2013/05/uninterpolated.jpg

All other points we have dealt with on multiple occasions.

I would appreciate it if you could explain why, as I describe in the “Whither stripes” blog post linked to above, Ong et al. claim that the spacing of the ‘stripes’ in Fig. 3 of their paper is 1.2 nm when simple measurement using a ruler shows that the spacing is instead 1.6 nm.

4. Note that you chastise us for the tone of our comments. As you know, I have admitted that this was the case for one of my posts and have apologised.

However, you are certainly not always particularly restrained in your language:

As I have said before, it is best left to the readers of our paper, of Raphael’s blog, and of the PubPeer comments thread to decide as to whether or not your accusations in the paragraph above accurately represent the situation.

Good night.

Philip


Back in 2005, I read the Nature Materials referees’ feedback. One of them clearly stated something along these lines: “even though STM images are most likely imaging artifacts, we certainly hope that we will see in the near future more experimental evidence to support the proposed ordered phase separation on the surface of nanoparticles. However, due to a potential high impact of authors’ findings, we recommend immediate publication”. While I do not have this document at hand, perhaps the Nature Materials editorial board can publish the referees’ reports and explain why they published an article in which even the referees recognized poor experimental practice and errors.
